18.09.2025

Deepfakes Explained: Types, Risks, and How to Detect Them

A few years ago, most people had never heard of the term deepfake. Today, it’s hard to avoid. From viral videos to online scams, deepfakes are increasingly shaping how we consume and trust digital content.

The technology behind them is both fascinating and alarming. On one hand, it demonstrates the incredible capabilities of modern artificial intelligence. On the other, it creates new risks for fraud, misinformation, and identity theft that businesses, governments, and individuals must urgently address.

Understanding how deepfakes work, where they can be beneficial, and how to detect them is essential for anyone navigating the modern digital landscape.

This article takes a closer look at what deepfakes are, how they are created, the different types that exist, their positive and negative uses, how they can be spotted, and what the law says about them.

What Are Deepfakes?

The term deepfake combines “deep learning” (a branch of machine learning built on multi-layer neural networks) and “fake.” It refers to synthetic media (images, audio, or video) that has been manipulated or fully generated using artificial intelligence to look and sound real.

Deepfakes use neural networks to replace, modify, or generate faces, voices, or entire scenes. Unlike traditional photo or video editing, which relies on manual work, deepfakes are produced by models trained on large datasets of real footage and audio. This allows them to replicate facial expressions, speech patterns, and mannerisms with striking accuracy, which makes the results difficult for the human eye to detect. As the underlying models improve, their output becomes harder to distinguish from genuine content.

A Brief History of Deepfakes

The idea of using computers to generate synthetic media is not new, but deepfakes as we know them only began to take shape in the mid-2010s, when breakthroughs in deep learning, notably the introduction of generative adversarial networks in 2014, made the technology viable. The term itself emerged in late 2017, and over 2017 and 2018 deepfakes reached mainstream awareness when AI-generated videos of celebrities went viral online. These videos were often created for non-consensual and exploitative purposes, sparking intense debate about the ethical implications of the technology.

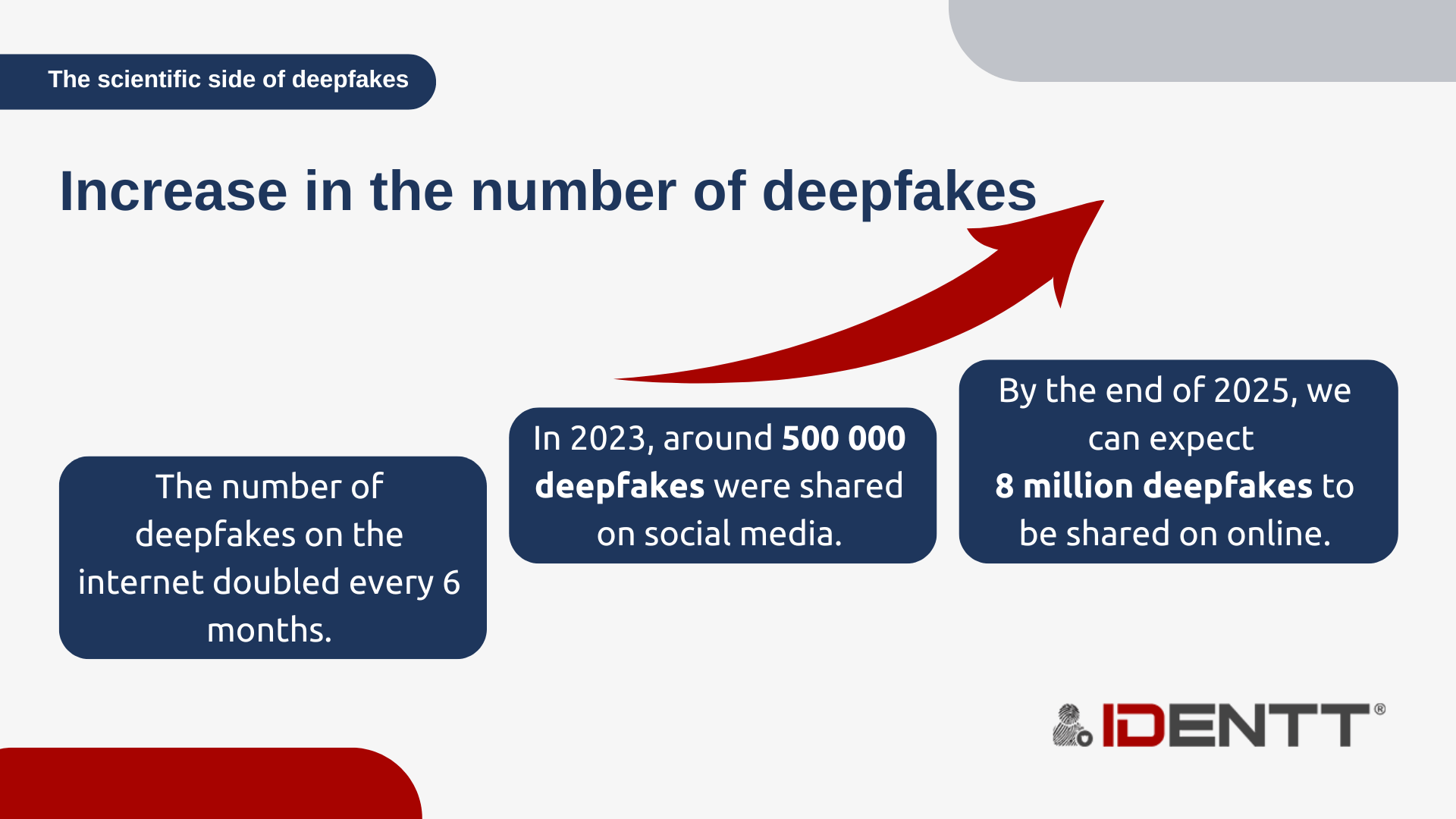

Since then, deepfakes have become more accessible and widespread. Tools and apps that once required specialized knowledge can now be used by anyone with minimal technical skill. As a result, the technology has expanded beyond entertainment and novelty into more serious domains. Today, deepfakes are used in disinformation campaigns, election interference, corporate fraud, and targeted cyberattacks. Their growing sophistication has turned them into a central concern for cybersecurity professionals, governments, and everyday users alike.

How Are Deepfakes Created?

Many deepfakes are created using Generative Adversarial Networks, or GANs, although other generative models such as autoencoders and diffusion models are also used. A GAN consists of two neural networks trained against each other. The first, called the generator, creates fake media, whether an image, a voice clip, or a video. The second, called the discriminator, judges whether a given sample is real or generated. The generator uses the discriminator’s feedback to correct its mistakes, producing increasingly realistic output until the discriminator can no longer reliably tell it apart from real media.
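To make the generator-versus-discriminator contest concrete, here is a minimal sketch of the two loss values a standard GAN tracks during training. It is an illustration under stated assumptions, not a full training loop: the function names are hypothetical, the discriminator scores are hand-picked numbers rather than network outputs, and the generator loss is the common "non-saturating" variant.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy the discriminator tries to minimize.

    d_real: discriminator scores in (0, 1) for real samples
    d_fake: discriminator scores in (0, 1) for generated samples
    A score near 1 means "looks real", near 0 means "looks fake".
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: low when fakes are scored as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Early in training (illustrative scores): the discriminator separates
# real from generated samples easily, so its loss is low and the
# generator's loss is high.
early_d = discriminator_loss([0.9, 0.95], [0.1, 0.05])
early_g = generator_loss([0.1, 0.05])

# At the end state described above, the discriminator can only guess,
# scoring every sample 0.5 regardless of whether it is real.
final_d = discriminator_loss([0.5, 0.5], [0.5, 0.5])
final_g = generator_loss([0.5, 0.5])

print(f"early: D={early_d:.3f} G={early_g:.3f}")
print(f"final: D={final_d:.3f} G={final_g:.3f}")
```

When the discriminator is reduced to guessing, its loss settles at 2·ln 2 and the generator's at ln 2, which is one way practitioners recognize that the two networks have reached a stalemate.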

Training has traditionally required large datasets of real-world examples. To create a convincing video deepfake of a person, the model typically needs extensive footage showing them speaking, moving, and expressing emotions. For audio deepfakes, the system is trained on recordings of the person’s voice in different tones and contexts. In general, the larger and more diverse the dataset, the more realistic the final product becomes.

Once trained, the system can produce content so lifelike that it can fool not only casual viewers but also advanced biometric systems. This is why deepfakes are increasingly being used in scams, phishing attempts, and identity fraud.

The Process

The creation of a deepfake typically follows a multi-step process that blends technical sophistication with widely available tools.

- Data collection: Gather photos, videos and audio recordings of the target person, frequently scraped from social media, interviews, or public appearances.

- Data preprocessing: Clean and organize the collected material; extract faces from video frames, isolate voice samples, normalize formats, and prepare datasets for training.

- Model training: Train neural networks—commonly using GANs or similar generative frameworks—on the preprocessed data so the model learns the target’s facial features, expressions and vocal patterns.

- Generation: Run the trained model to produce synthetic images, audio or video, iteratively adjusting parameters to improve realism and reduce obvious artifacts.

- Post-processing: Refine the generated output with editing and enhancement tools to remove glitches, correct lighting or color mismatches, and boost overall quality.

The time and resources required vary widely: simple, low-quality deepfakes can be produced in seconds with off-the-shelf tools, while high-quality results often require days or weeks of training and powerful hardware.

Types of Deepfakes

Deepfakes can take many forms. Some of the most common include:

- Video Deepfakes – AI-generated videos with face swaps, altered speech, or fabricated scenarios.

- Audio Deepfakes – Synthetic voices imitating real people. (See our full article on audio deepfakes for a deep dive.)

- Image Deepfakes – AI-created or modified photos, used in both art and misinformation.

- Text-Based Deepfakes – AI-generated articles, posts, or messages designed to mislead or spread propaganda.

- 3D & Full-Body Manipulation – Altering not just faces but entire bodies in video or 3D environments.

- Real-Time Deepfakes – Face and voice alterations applied instantly during livestreams or video calls.

- Synthetic Media & AI Avatars – Entirely AI-generated characters or avatars used in gaming, marketing, or social interaction.

Positive Uses of Deepfakes

Despite their reputation, not all deepfakes are harmful. In fact, the technology has many potential benefits when used ethically. In the entertainment industry, deepfakes are being used to create realistic special effects, de-age actors, or even bring deceased performers back to the screen. This reduces production costs and opens new creative possibilities for filmmakers.

In education, deepfakes can bring history to life by recreating historical figures or events in a way that makes them more engaging for students. They can also be used to create realistic training simulations for doctors, pilots, or military personnel, offering immersive and effective learning experiences.

Accessibility is another area where deepfakes can play a positive role. AI avatars can provide sign language interpretation, translate spoken content into multiple languages, or generate personalized communication for individuals with disabilities.

Even in marketing, deepfakes can enable personalized campaigns where content is tailored to individual viewers, making communication more engaging and memorable. They can also help preserve legacies by digitally recreating public figures to share messages with future generations.

The Risks of Deepfakes

The darker side of deepfakes lies in their misuse. One of the most pressing risks is misinformation and disinformation. Deepfakes can be used to create fake speeches, fabricated news clips, or manipulated political content that can sway public opinion and undermine trust in institutions.

Another major risk is fraud. Criminals are increasingly using audio and video deepfakes to impersonate trusted figures in order to authorize fraudulent payments or gain access to sensitive information. This poses a significant threat to businesses and individuals alike.

For example, in early 2024 an employee at the Hong Kong office of the engineering firm Arup transferred roughly US$25 million after joining a video call in which the company’s chief financial officer and other colleagues turned out to be deepfake recreations. The employee believed the request was legitimate and only later discovered that the video was synthetic. This underscores how deepfake attacks can bypass traditional verification and lead to massive financial losses. Similarly, Veriff’s 2025 report shows that 1 in 20 identity verification failures now stems from deepfake-based manipulation, indicating that what used to be a fringe technique is now a mainstream threat.

Privacy violations are another concern. Many deepfakes are created without the consent of the individuals depicted, often for harassment, defamation, or non-consensual explicit content. This can have devastating personal and professional consequences for the victims.

Cybersecurity professionals also warn that deepfakes can be used to bypass biometric systems, such as facial recognition, which are increasingly used in identity verification and security. As the technology becomes more sophisticated, authentic and fake media become harder to tell apart, eroding trust in digital content altogether.

How to Spot a Deepfake

Spotting deepfakes manually is getting harder, but there are still visual and audio red flags:

- Unnatural facial movements or desynchronized blinking.

- Lighting inconsistencies (shadows, reflections not aligning).

- Blurred edges or flickering around faces.

- Audio-visual mismatch (speech not matching lip movement).

- Background distortions or unrealistic perfection (skin too smooth).
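The blinking red flag can be turned into a rough automated check. The sketch below assumes that six eye landmarks per frame have already been extracted by some face-landmark tool (not shown) and uses the widely cited eye aspect ratio (EAR), which drops toward zero as the eye closes. The function names, the 0.2 closed-eye threshold, and the 4 to 40 blinks-per-minute range are illustrative assumptions, not calibrated values.

```python
def eye_aspect_ratio(eye):
    """EAR from six (x, y) eye landmarks ordered p1..p6.

    p1 and p4 are the horizontal eye corners; (p2, p6) and (p3, p5)
    are the two vertical pairs across the eyelids.
    """
    def dist(a, b):
        return ((a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2) ** 0.5

    p1, p2, p3, p4, p5, p6 = eye
    return (dist(p2, p6) + dist(p3, p5)) / (2.0 * dist(p1, p4))

def count_blinks(ear_series, closed_threshold=0.2, min_closed_frames=2):
    """Count runs of at least min_closed_frames frames with EAR below threshold."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < closed_threshold:
            run += 1
        else:
            if run >= min_closed_frames:
                blinks += 1
            run = 0
    if run >= min_closed_frames:  # clip ended while the eye was closed
        blinks += 1
    return blinks

def blink_rate_is_suspicious(ear_series, fps,
                             min_per_minute=4.0, max_per_minute=40.0):
    """Flag clips whose blink rate falls outside an assumed plausible range."""
    if not ear_series or fps <= 0:
        raise ValueError("need a non-empty EAR series and a positive frame rate")
    minutes = len(ear_series) / fps / 60.0
    rate = count_blinks(ear_series) / minutes
    return rate < min_per_minute or rate > max_per_minute

# Hypothetical landmark positions for a wide-open eye.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
ear_open = eye_aspect_ratio(open_eye)

# Ten seconds at 30 fps: one clip with three short blinks, one with none.
natural_clip = ([0.3] * 97 + [0.1] * 3) * 3
frozen_clip = [0.3] * 300

print(blink_rate_is_suspicious(natural_clip, fps=30))
print(blink_rate_is_suspicious(frozen_clip, fps=30))
```

A check like this is only a weak signal: short clips give noisy rate estimates, and newer deepfakes often reproduce blinking convincingly, so it belongs alongside other cues rather than in place of them.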

For businesses, manual checks aren’t enough. That’s where technology-driven detection comes in:

- Liveness detection (confirming the presence of a real person).

- Micro-expression analysis.

- Biometric checks like iris or facial structure analysis.

- Neural network–based forensic tools that analyze artifacts invisible to humans.

- Multimodal data analysis to cross-check video, audio, and metadata.
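As a small illustration of cross-checking modalities, the following sketch compares a per-frame mouth-opening signal from the video with a per-frame loudness signal from the audio and estimates the delay between them. It covers only the video and audio part of the idea (metadata is left out), and the function names, the sample signals, and the tolerance values are assumptions for illustration rather than any product's actual method.

```python
def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / (var_x * var_y) ** 0.5

def best_sync_lag(mouth_opening, audio_energy, max_lag=5):
    """Return (lag, correlation) where audio_energy[t + lag] best matches mouth_opening[t]."""
    best = (0, -1.0)
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            m = mouth_opening[:len(mouth_opening) - lag]
            a = audio_energy[lag:]
        else:
            m = mouth_opening[-lag:]
            a = audio_energy[:len(audio_energy) + lag]
        n = min(len(m), len(a))
        if n < 3:  # too little overlap to correlate meaningfully
            continue
        r = pearson(m[:n], a[:n])
        if r > best[1]:
            best = (lag, r)
    return best

def looks_out_of_sync(mouth_opening, audio_energy, max_lag=5,
                      tolerance_frames=1, min_correlation=0.5):
    """Flag a clip whose best audio/video alignment is delayed or weakly correlated."""
    lag, r = best_sync_lag(mouth_opening, audio_energy, max_lag)
    return abs(lag) > tolerance_frames or r < min_correlation

# Hypothetical signals: mouth opening per frame, and audio delayed by 3 frames.
mouth = [0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 2.0, 4.0,
         2.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0]
delayed_audio = [0.0] * 3 + mouth[:-3]

lag, correlation = best_sync_lag(mouth, delayed_audio)
print(lag, round(correlation, 3))
print(looks_out_of_sync(mouth, delayed_audio))
print(looks_out_of_sync(mouth, mouth))
```

In practice the two input signals would come from a landmark tracker and an audio envelope extractor, and the result would be one input among several to a final decision rather than a verdict on its own.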

Are Deepfakes Illegal?

Deepfakes themselves are not inherently illegal. Their legality depends on how they are used. If a deepfake is created for entertainment, art, or educational purposes with consent, it is generally lawful. However, using deepfakes for fraud, blackmail, defamation, or identity theft is illegal in most jurisdictions.

The legal landscape is still evolving, with many governments introducing or considering regulations specifically addressing deepfakes. In the meantime, existing laws on cybercrime, harassment, and intellectual property often apply.

Lawmakers are starting to catch up. In the U.S., the Take It Down Act, signed into law in 2025, requires platforms to remove nonconsensual intimate imagery, including deepfake content, once a victim reports it. Meanwhile in Denmark, new legislation is being drafted that would make it illegal to share deepfake or AI-generated content of someone’s likeness without consent, even outside explicit cases. These moves reflect growing recognition that regulation is necessary to protect individual rights and public trust.

How IDENTT Protects Against Deepfake Fraud

At IDENTT, we recognize the growing threat posed by deepfakes to identity verification and online security. Our solutions are designed to protect organizations from fraud by combining multiple layers of defense. Liveness detection ensures that a real, live person is present during verification. Biometric analysis compares facial and iris features to verify authenticity. AI-based face comparison helps match ID photos with real users, while neural network detection models flag content that shows signs of being artificially generated.

By combining these techniques, we provide businesses with a powerful defense against deepfake-based fraud. This is especially important in sectors where identity verification is critical, such as banking, insurance, and online services.

Conclusion: Navigating a Deepfake World

While deepfakes offer exciting possibilities for creativity, education, and accessibility, they also carry serious risks for individuals, businesses, and society as a whole.

The best way to navigate this new reality is through a combination of awareness and technology. By understanding how deepfakes work, recognizing their potential dangers, and deploying advanced detection solutions, we can defend ourselves against manipulation and fraud.

The good news is that detection technology is advancing as well. At IDENTT, we are committed to helping organizations protect themselves against deepfake-related threats with tools designed to ensure secure and trustworthy identity verification. In a world where seeing is no longer believing, safeguarding authenticity is more important than ever.
